Max Tegmark on the Nature of Learning: How Brains Evolve to Think and How AI Follows Suit

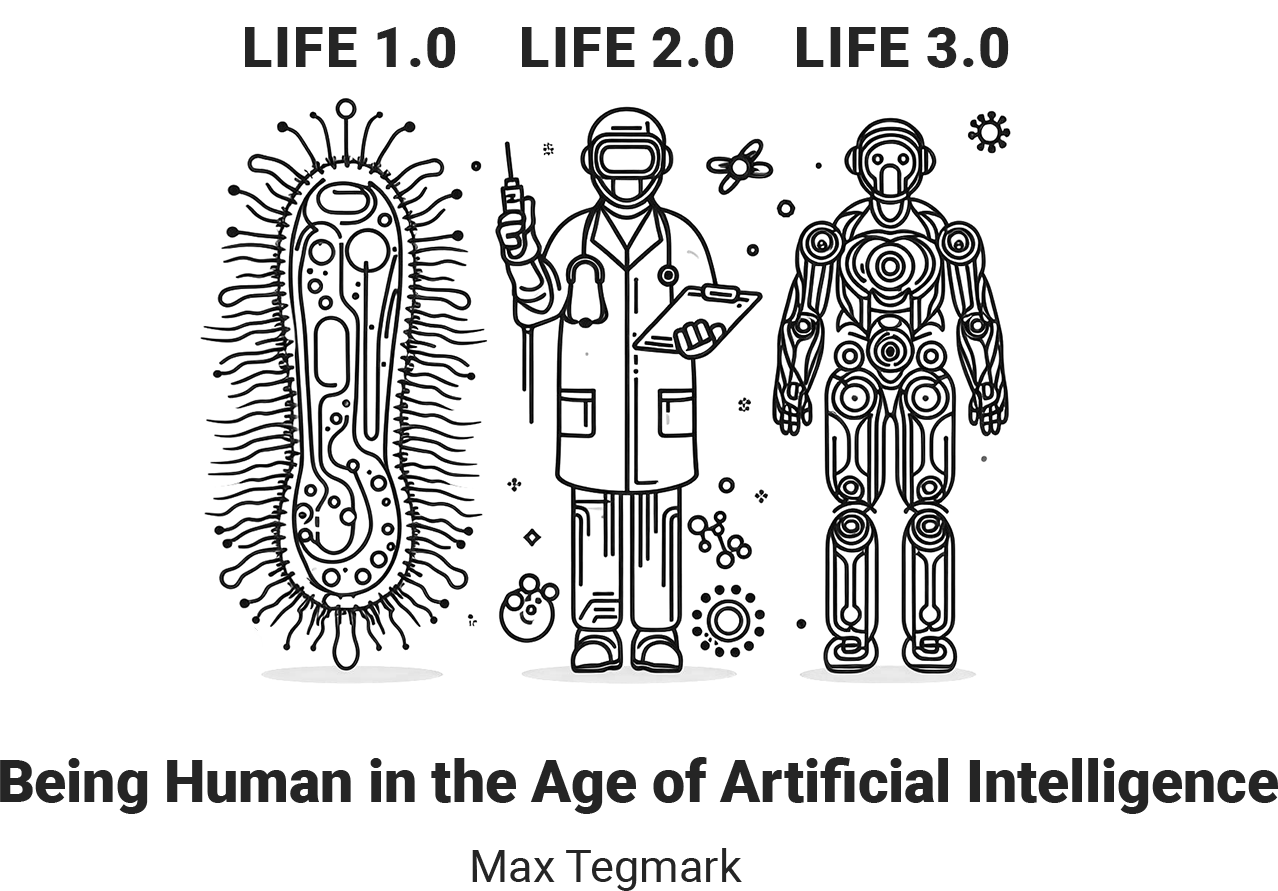

After reading Max Tegmark's book "Life 3.0", I was struck by the way the author classifies the development of life and intelligence into three stages. This classification offers an interesting perspective on the evolution of artificial intelligence and its impact on the future of humanity.

The first stage, "Life 1.0", covers simple biological organisms such as bacteria, which act according to predetermined programs and instincts.

The second stage, "Life 2.0", is characteristic of modern humans: we can learn, create new ideas, and possess consciousness and self-awareness.

However, the most exciting part is "Life 3.0", which implies the onset of the era of superintelligence. At this stage, intelligence is capable not only of learning but also of independently shaping its own development and its environment. This raises questions about how to ensure the safe and ethical development of such intelligence, and how it may affect humanity's self-determination.

The book also offers an interesting account of the learning process. It asks how physical systems, including artificial neural networks and the human brain, are able to learn and adapt to a changing environment.

Although a pocket calculator, as the author notes, can outpace a person at arithmetic, it cannot improve its performance through practice. The same contrast appears in the example of a classic chess program: although it defeats a person at chess, it cannot learn from its mistakes and performs the same programmed actions every time it evaluates the next move.

The ability to learn is considered a fundamental characteristic of strong intelligence. We have already seen how a seemingly meaningless piece of lifeless matter turns out to be able to remember and calculate, but how can it learn? We have seen that finding the answer to a difficult question involves calculating some functions, and suitably organized matter can calculate any computable function. When we humans first created pocket calculators and chess programs, we somehow organized matter in a certain way. And now, in order to learn, this matter simply must, following the laws of physics, reorganize itself, becoming better and better at calculating the necessary functions.

To unravel the learning process, let's first look at how a very simple physical system can learn to calculate the sequence of digits of the number π or any other number. We have seen earlier how a hilly surface with many hollows between hills can be used as a storage device: for example, if the x-coordinate of one of the hollows exactly matches π, and there are no other hollows nearby, then by placing a ball at the point with x-coordinate 3, we can see how our system calculates the missing digits after the decimal point, simply by watching how the ball rolls into the hollow.

Now suppose the surface is made of soft clay, originally completely flat like a table. If some math enthusiasts keep placing balls at the points whose coordinates match their favorite numbers, gravity will gradually form hollows at these points, and over time the clay surface can be used to find out which numbers it has "remembered". In other words, the clay has learned to calculate the digits of numbers such as π.
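The clay thought experiment can be simulated in a few lines. The following is only a rough sketch under stated assumptions, not anything taken from the book: the grid spacing, the Gaussian shape of each dent, and the names `press` and `roll` are all invented for illustration.

```python
import math

STEP = 0.01              # grid spacing of the clay surface (assumed)
N = 1001                 # grid covers x = 0.00 .. 10.00
height = [0.0] * N       # flat clay: no hollows yet

def press(x, depth=0.1, width=0.5):
    """Placing a ball at x deepens the clay around x (assumed Gaussian dent)."""
    for i in range(N):
        xi = i * STEP
        height[i] -= depth * math.exp(-((xi - x) / width) ** 2)

def roll(x):
    """Release a ball at x; it steps to the lower neighbour until it rests."""
    i = round(x / STEP)
    while True:
        neighbours = [j for j in (i - 1, i + 1) if 0 <= j < N]
        lowest = min(neighbours, key=lambda j: height[j])
        if height[lowest] >= height[i]:
            return i * STEP      # local minimum: the ball stops here
        i = lowest

# "Training": enthusiasts repeatedly place balls at their favourite number.
for _ in range(20):
    press(math.pi)

# "Recall": a ball released at x = 3 rolls into the hollow near pi.
recalled = roll(3.0)
print(recalled)
```

With this grid the ball can only settle on a multiple of 0.01, so the recalled value matches π to about two decimal places; a finer grid would recover more digits.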

Other physical systems, including the brain, can learn much more efficiently, but the idea remains the same. John Hopfield showed that a network of interconnected neurons can store many complex memories simply by being exposed to them repeatedly. Such exposure to information for the purpose of learning is usually called "training" when it comes to artificial neural networks (as well as animals or humans who need to acquire a certain skill), although words like "experience", "upbringing" or "education" also fit.
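To make the idea of a network that stores memories more concrete, here is a minimal sketch of a Hopfield-style network. It assumes the textbook formulation (units with values +1 or -1, weights strengthened between units that are active together); the function names `train` and `recall` and the two tiny example patterns are hypothetical and chosen only for illustration.

```python
def train(patterns):
    """Build the weight matrix: units that agree in a pattern are coupled more strongly."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:               # no self-connections
                    w[i][j] += p[i] * p[j]
    return w

def recall(w, state, sweeps=5):
    """Update units one at a time until the state settles into a stored memory."""
    s = list(state)
    for _ in range(sweeps):
        for i in range(len(s)):
            total = sum(w[i][j] * s[j] for j in range(len(s)))
            s[i] = 1 if total >= 0 else -1
    return s

# Two hypothetical "memories", each a pattern of +1 / -1 values.
memory_a = [1, 1, 1, 1, -1, -1, -1, -1]
memory_b = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train([memory_a, memory_b])

# Corrupt one element of the first memory and let the network restore it.
noisy = list(memory_a)
noisy[0] = -noisy[0]
restored = recall(weights, noisy)
print(restored == memory_a)
```

The network is never told which memory the noisy input belongs to; the state simply settles into the nearest stored pattern, much like the ball rolling into the nearest hollow.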

In the artificial neural networks that underlie modern artificial intelligence systems, Hebbian learning (the rule that connections between neurons that fire together grow stronger) is usually replaced by more complex rules with less melodious names, such as "backpropagation" or "stochastic gradient descent", but the basic idea remains the same: there is some simple deterministic rule, similar to a law of physics, according to which synapses are updated over time. As if by magic, using this simple rule, a neural network can be trained to perform extremely complex computations if large data sets are used for training. We still do not know exactly what rules the brain uses for learning, but whatever the answer, there is no evidence that these rules violate the laws of physics.
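As an illustration of how simple such an update rule can be, here is a minimal sketch of stochastic gradient descent on a single artificial "neuron" with one weight and one bias. The training data (noiseless samples of y = 2x + 1), the learning rate, and the number of passes are assumptions made purely for this example.

```python
import random

random.seed(0)

# Hypothetical training data: noiseless samples of y = 2x + 1.
data = [(x / 10, 2 * (x / 10) + 1) for x in range(-20, 21)]

w, b = 0.0, 0.0      # the "synapses": start with no knowledge
lr = 0.05            # learning rate (assumed)

for epoch in range(200):
    random.shuffle(data)             # "stochastic": visit samples in random order
    for x, y in data:
        error = (w * x + b) - y      # prediction minus target
        # Gradient of 0.5 * error**2 with respect to w and b:
        w -= lr * error * x
        b -= lr * error

print(round(w, 3), round(b, 3))
```

The rule never mentions the formula y = 2x + 1; the weight and bias drift toward 2 and 1 only because each small update reduces the error on one example.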

Most digital computers increase their efficiency by breaking tasks down into multiple steps and reusing the same computational modules over and over, and artificial and biological neural networks do the same. The brain contains what computer scientists call "recurrent neural networks": information in them can flow in several directions, and what served as output at one step can become input at the next. This distinguishes them from feedforward networks, in which information flows in one direction only.
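The difference between the two kinds of network can be shown with a toy example. This is only a sketch: the single-unit "networks", the weights, and the names `feedforward` and `recurrent_step` are hypothetical.

```python
import math

def feedforward(x, w=0.5):
    """Output depends only on the current input."""
    return math.tanh(w * x)

def recurrent_step(x, h, w_in=0.5, w_rec=0.9):
    """Output depends on the current input AND the previous output h."""
    return math.tanh(w_in * x + w_rec * h)

inputs = [1.0, 0.0, 0.0]     # a single pulse followed by silence

ff_outputs = [feedforward(x) for x in inputs]

h = 0.0                      # previous output, initially empty
rnn_outputs = []
for x in inputs:
    h = recurrent_step(x, h)  # the old output is fed back in as input
    rnn_outputs.append(h)

print(ff_outputs)
print(rnn_outputs)
```

Once the input falls silent, the feedforward unit immediately returns to zero, while the recurrent unit keeps a fading trace of the earlier pulse because its own output is reused as input.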

The network of logic gates in a laptop microprocessor is also recurrent in this sense: it keeps using already processed information while letting new information enter from the keyboard, trackpad, camera, and so on. This new information is allowed to influence the ongoing calculations, which in turn determine how information is output: to the monitor, speakers, printer or wireless network. Similarly, the neural network in your brain is recurrent because it receives information from your eyes, ears, and other sense organs and lets this information influence the ongoing calculations, which in turn determine what is output to your muscles.

The history of learning is as long as the history of life itself, since every self-reproducing organism copies and processes information, that is, behaves in ways that somehow had to be learned. However, in the era of "Life 1.0", organisms did not learn during their lifetime: the ways of processing information and responding were determined by the DNA passed on to the organism, so learning took place slowly, at the species level, through Darwinian evolution from generation to generation.

About half a billion years ago, some gene lines here on Earth paved the way for the emergence of animals with neural networks, giving such animals the ability to change their behavior by learning from experience during their lifetime. When Life 2.0 appeared, it won the competition among species and spread across the planet like wildfire thanks to its ability to learn much faster. As the first chapter of the book explains, life has gradually improved its ability to learn, and has done so at an ever-increasing rate.

In one species of primates, the brain turned out to be so well adapted to learning that its members learned to use various tools, make fire, and speak, and created an advanced society that spread around the world. This society itself can be seen as a system that remembers, calculates, and learns, and it does all this at an ever-increasing rate, since one invention leads to another: writing, printing, modern science, computers, the Internet, and so on. What inventions will future historians add next to this list of things that accelerate learning?

As we all know, the avalanche of technical achievements that improve computer memory and increase computing power has led to impressive advances in artificial intelligence, but it took machine learning a long time to mature. When IBM's Deep Blue computer defeated world chess champion Garry Kasparov in 1997, its main advantages were memory and the ability to calculate quickly and accurately, not the ability to learn. Its computational intelligence was created by a team of people, and the key reason Deep Blue could outplay its creators was its ability to calculate faster, which allowed it to analyze more positions on the chessboard. When IBM's Watson computer beat the strongest human player at Jeopardy!, it too relied not on learning but on specially programmed skills and superior memory and speed. The same can be said of most early breakthroughs in robotics, from self-balancing vehicles to self-driving cars and rockets that can land automatically.

In contrast, machine learning has been the driving force behind many recent advances in artificial intelligence. Look, for example, at the photo below. You will instantly see what it shows, but writing a function that takes the color of each pixel of the image as input and gives an accurate description of the photo as output, for example "A group of young people playing frisbee", eluded artificial intelligence researchers around the world for decades. A team at Google finally managed it in 2014. If you enter another set of pixels, the output will be "A herd of elephants walking across a dry grassy field", and the answer will again be accurate. How did they do it? By manual programming, as was done for Deep Blue, writing a separate algorithm to recognize frisbee play, faces, and the like? No, they created a relatively simple neural network that initially had no knowledge of the physical world and its components, and then allowed it to learn by providing it with a huge amount of information.

In 2004, Palm co-founder Jeff Hawkins wrote about artificial intelligence: "No computer can... see as well as a mouse," but those days are long gone.

"A group of people playing frisbee" is the caption generated for this photo by a machine that knew nothing about people, games, or frisbees.

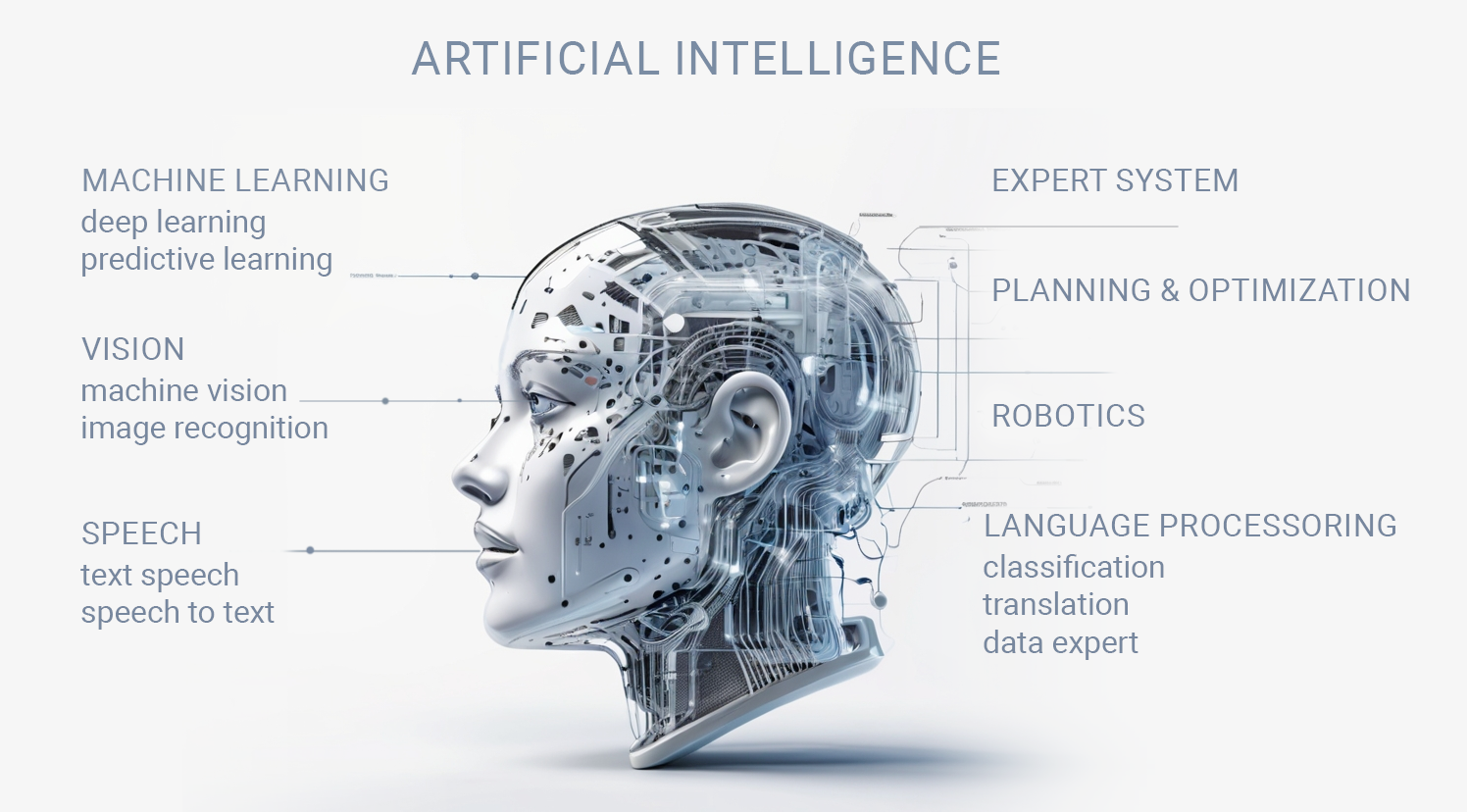

Just as we do not fully understand how our children learn, we still do not fully understand how these neural networks learn and why they sometimes fail. But it is already clear that they will be very useful, which is why deep learning has attracted investors. Deep learning has radically changed approaches to computer vision, from handwriting recognition to real-time video analysis and driverless cars. It has also revolutionized the use of computers to convert speech to text and translate it into other languages, even in real time, so now we can talk to personal digital assistants like Siri, Google Now, or Cortana. The annoying CAPTCHA puzzles that we have to solve to convince a site that we are human have to become more and more difficult to stay ahead of what machine learning can do. In 2015, Google DeepMind released an artificial intelligence that used deep learning to master dozens of different computer games just as a child would, that is, without instructions, with the only difference being that it learned to play better than any human.

In 2016, the same company released AlphaGo, a computer system for the game of Go, which used deep learning to evaluate positional advantages so accurately that it defeated one of the world's strongest players. This success serves as positive feedback, attracting more funding and more talented young people to artificial intelligence research, which leads to new successes.

How long will it take machines to surpass us in solving all cognitive tasks? We don't know, and we must be prepared for the answer to be "never." However, we also need to prepare for the possibility that it will happen, perhaps even in our lifetime, because matter can be organized in such a way that, obeying the laws of physics, it remembers, calculates, and learns, and this matter does not have to be biological.

Artificial intelligence researchers are often accused of over-promising and under-delivering, but in fairness, some of these critics do not have the best track records either. Some of them are just playing with words, at one point defining intelligence as whatever computers still cannot do, and at another as whatever impresses us the most. Computers have now become very good, or even superior to humans, at arithmetic, chess, mathematical theorem proving, stock picking, image captioning, driving, arcade games, Go, speech synthesis, speech-to-text conversion, translation, and even cancer diagnosis, yet some critic will just snort contemptuously: "Of course, you don't need a real mind for that!" Such critics go on to argue that real intelligence must reach the peaks of Moravec's landscape (Hans Moravec's picture of human abilities as terrain, with the rising water level marking what computers can already do) that are not yet submerged, just like those people in the past who argued that a machine would never manage image captioning or Go, while the water continued to rise.

If the water continues to rise for at least some time, it is reasonable to expect that the impact of artificial intelligence on society will continue to grow.

Deep learning has become a driver of technical progress in various fields. Computer vision, speech recognition, and language translation processes have moved to a new level thanks to this technology. The ability of machine learning to quickly adapt to new data and scenarios has led to the creation of systems capable of recognizing objects in real time, giving rise to driverless cars and smart homes.

Projects like Google DeepMind's AlphaGo demonstrate how deep learning leads to artificial intelligence capable not only of adapting but also of surpassing human abilities in complex tasks such as the game of Go. These achievements attract the attention of investors and talented researchers, stimulating further progress in the field of artificial intelligence.

The author also draws attention to a recurring debate in the field: critics acknowledge how successful artificial intelligence systems have already become in various areas, yet continue to deny that these systems are truly intelligent. He warns against a dismissive attitude towards the achievements of artificial intelligence and notes that its successes should be recognized, even if they differ from what we usually associate with human intelligence.

Max Tegmark's book "Life 3.0", first published in 2017, left a deep mark on our perception of artificial intelligence. Several years have passed since then, and during this time the integration of artificial intelligence into everyday life has only intensified.

Natural language processing systems like ChatGPT have become more sophisticated and widely used to enhance human-computer interaction. Google Bard and Microsoft Bing, backed by advanced artificial intelligence technologies, continue to improve search query quality and provide more accurate results.

All these changes emphasize the constant growth of the influence of artificial intelligence on our daily lives. It not only transforms the ways we interact with technology but also becomes an indispensable tool in various fields, enriching and improving the quality of our existence.
